Late one evening last week, a freelance developer was in a cramped London café wrestling with a stubborn codebase. His screen glowed with half-finished functions, browser tabs sprawling like an untidy desk.

He typed a prompt into claude.ai, “Refactor this Node.js middleware for rate limiting, sliding window, production-ready”, and hit Enter.

Seconds later, clean code scrolled out: efficient, idiomatic, with edge-case handling he hadn’t even specified. Claude Sonnet 4.6 is slipping into real workflows like this one, making once-laborious work feel routine.
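For readers who haven’t built one, the technique named in that prompt is easy to sketch. The TypeScript below is a minimal sliding-window-log limiter: it keeps recent request timestamps per client key and rejects a request once `limit` of them fall inside the last `windowMs` milliseconds. It is not the code Claude produced. The class name, the `Req`/`Res` shapes, and the Express-style `(req, res, next)` signature are illustrative assumptions, and state lives only in process memory.

```typescript
// Sliding-window-log rate limiter: a minimal sketch, not Claude's output.
// Allows at most `limit` requests per `windowMs` for each key (e.g. a client IP).
class SlidingWindowLimiter {
  private readonly hits = new Map<string, number[]>();
  private readonly limit: number;
  private readonly windowMs: number;

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  // Returns true if a request for `key` at time `now` (ms) is allowed.
  allow(key: string, now: number = Date.now()): boolean {
    const windowStart = now - this.windowMs;
    // Drop timestamps that have slid out of the window.
    const recent = (this.hits.get(key) ?? []).filter((t) => t > windowStart);
    const allowed = recent.length < this.limit;
    if (allowed) recent.push(now); // only accepted requests count toward the limit
    this.hits.set(key, recent);
    return allowed;
  }
}

// Hypothetical minimal request/response shapes; real Express or node:http
// types are richer than this.
interface Req { ip: string }
interface Res { statusCode: number; end(body?: string): void }

// Wraps the limiter as Express-style middleware (assumed signature).
function rateLimit(limiter: SlidingWindowLimiter) {
  return (req: Req, res: Res, next: () => void): void => {
    if (limiter.allow(req.ip)) {
      next();
    } else {
      res.statusCode = 429; // Too Many Requests
      res.end("Too Many Requests");
    }
  };
}
```

A timestamp log is exact but stores one entry per accepted request; counter-based approximations trade precision for memory, and a deployment running several Node processes would need shared state (for example, Redis) rather than an in-process `Map`.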

This model, launched by Anthropic on February 17, 2026, marks a pivot. It’s now the default for free and Pro users on claude.ai and Claude Cowork, blending frontier smarts with everyday pricing.

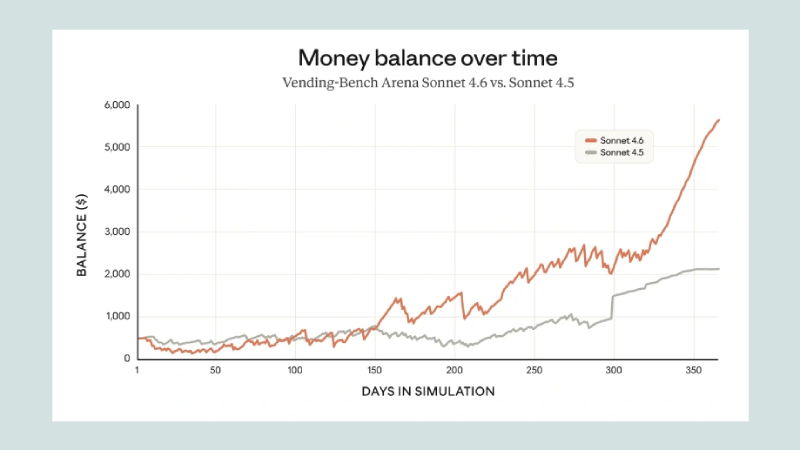

Early-access developers prefer it to its predecessors, and sometimes even to Opus 4, for its reliability on office tasks, long coding sessions, and agentic planning. But as with any tool this potent, the shine comes with shadows: heavier token consumption, ties to Anthropic’s ecosystem, and the subtle grind of over-reliance.

Why Claude Sonnet 4.6 Feels Like a Breakthrough

Sonnet 4.6 excels where work gets messy.

- Picture a small business owner debugging a Shopify integration at midnight: the model reads the full error log, suggests fixes, and even drafts tests with mocks.

- Students cramming for coding interviews get step-by-step breakdowns that build real skills, not just answers.

- Young creators scripting TikTok automations or building financial models get more polished output: better layouts, smoother animations, and fewer revisions.

- Families use Claude in Excel to budget, pulling live data through S&P connectors without leaving their spreadsheets.

The gains stem from iterative training.

Coding improvements mean it anticipates needs: in one test, it refactored PHP JSON-parsing code to work portably across PHP versions, avoiding the nonexistent functions GPT-5 hallucinated on the same task.

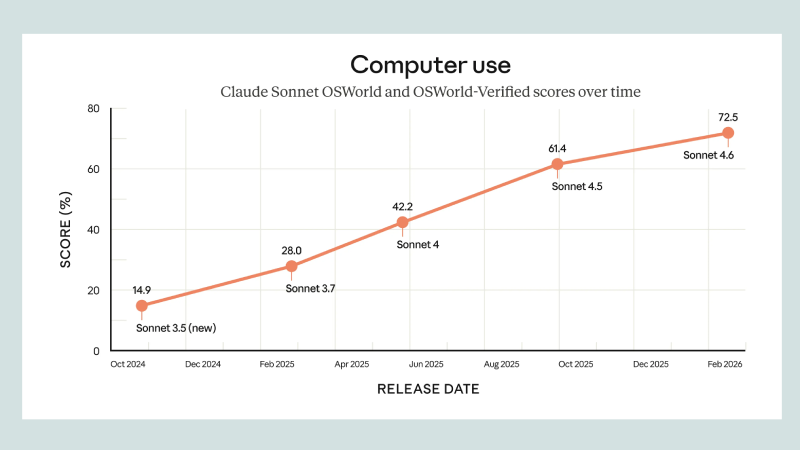

Computer use, which Anthropic pioneered in 2024, now handles messy multi-tab workflows competently, though it still trails skilled humans when a task is ambiguous.

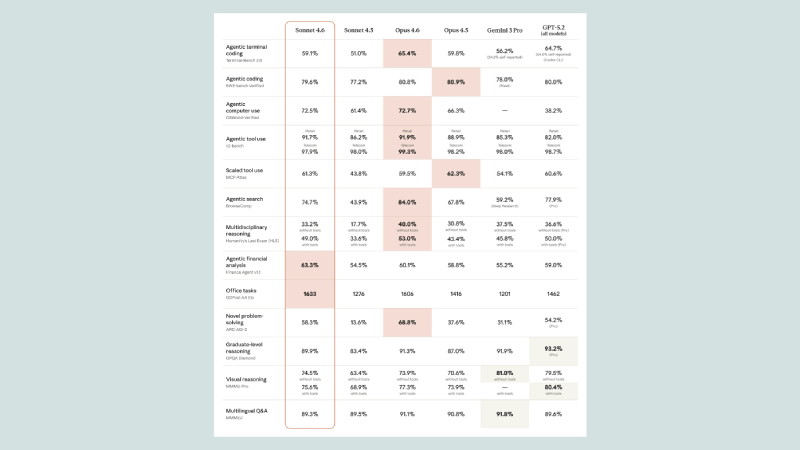

Benchmarks back this up: it ranks second on the Artificial Analysis Intelligence Index (51 points, edging out GPT-5.2) and tops agentic evaluations such as GDPval-AA.

The Trade-Offs You Won’t Hear in Press Releases

No tool this advanced lacks friction.

- Token inefficiency bites hardest: Sonnet 4.6 consumed 74 million output tokens on the benchmarks versus 25 million for Sonnet 4.5, inflating real costs despite list pricing of $3 per million input tokens and $15 per million output tokens.

- A full eval run tallied $2,088, only modestly below Opus 4.6’s $2,486.

- Generation is slower too: 8.2 seconds on average in developer benchmarks, versus 6.9 seconds for GPT-5.

- The learning curve steepens with adaptive thinking (effort levels from low to max) and with features such as context compaction and tool chains (web search, code execution).

- Free-tier upgrades such as file creation and skills are tempting, but the full experience sits behind Pro ($20/month) or the API, and that lock-in favors committed users.
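The token figures in the list above matter more than the per-token rate, which is easy to see once you multiply them out. A minimal TypeScript sketch, assuming the $3/$15 list prices apply uniformly (no caching, batch discounts, or other pricing tiers are modeled; the function and constant names are illustrative):

```typescript
// List prices quoted in the article, in US dollars per million tokens.
const INPUT_USD_PER_MTOK = 3;
const OUTPUT_USD_PER_MTOK = 15;

// Cost of a workload at list price (no discounts or pricing tiers modeled).
function costUsd(inputTokens: number, outputTokens: number): number {
  return (
    (inputTokens / 1_000_000) * INPUT_USD_PER_MTOK +
    (outputTokens / 1_000_000) * OUTPUT_USD_PER_MTOK
  );
}

// Output-token cost of the benchmark runs cited above; the article gives no
// input-token counts, so input is set to zero here.
const sonnet46OutputCost = costUsd(0, 74_000_000); // 1110
const sonnet45OutputCost = costUsd(0, 25_000_000); // 375
console.log(sonnet46OutputCost - sonnet45OutputCost); // 735
```

At an identical rate, roughly tripling output tokens (74 million versus 25 million) roughly triples the output bill, from $375 to $1,110. If both figures refer to the same evaluation run, output alone accounts for about half of the $2,088 total, which is why the rate card understates what Sonnet 4.6 costs to run.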

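Prompt injections remain an attack vector: mitigated, but not erased. Then there is the quieter risk of over-reliance. It is tempting to ask why anyone should master regex when the AI seems to handle it flawlessly, until a subtly wrong pattern ships and nobody on the team can spot the bug.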
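Regular expressions are a classic case of AI output that is hard to eyeball, so a cheap guard is to pin any suggested pattern to a few test cases before trusting it. A minimal TypeScript sketch; the pattern is a hypothetical example of an AI suggestion, not output from Sonnet 4.6, and the test inputs are illustrative:

```typescript
// Hypothetical AI-suggested pattern for YYYY-MM-DD dates (illustrative only).
const isoDate = /^\d{4}-(0[1-9]|1[0-2])-(0[1-9]|[12]\d|3[01])$/;

// Inputs the pattern must accept and must reject.
const shouldMatch = ["2026-02-17", "1999-12-31"];
const shouldReject = ["2026-13-01", "2026-2-17", "17-02-2026", "2026-02-17x"];

// Returns a description of every case the pattern gets wrong.
function regexFailures(): string[] {
  const failures: string[] = [];
  for (const s of shouldMatch) {
    if (!isoDate.test(s)) failures.push(`missed ${s}`);
  }
  for (const s of shouldReject) {
    if (isoDate.test(s)) failures.push(`wrongly accepted ${s}`);
  }
  return failures;
}

console.log(regexFailures()); // []
// The shape checks pass, yet an impossible date still slips through:
console.log(isoDate.test("2026-02-30")); // true
```

Every listed case passes, but the pattern still accepts February 30: a regex validates the shape of a date, not whether it exists. That is exactly the kind of gap that goes unnoticed when nobody reviewing the code can read the pattern.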

Real-World Ripples Across Lives

- For students, it’s a tutor that scales: the 1M-token context window swallows entire sets of lecture notes and turns them into practice problems. Budget-conscious users love the free access, but heavy use nudges them toward a paid plan.

- Young creators thrive on its design polish for apps and visuals, iterating quickly toward production.

- Small business owners automate legacy CRM navigation, saving hours weekly.

- Families gain from Excel add-ins, though non-techies hit walls without simple prompts.

Exclusions hurt:

- Non-English speakers face subtle biases.

- Those in API deserts, with no access through Vertex AI or Amazon Bedrock, have to wait.

- It favors coders and planners, sidelining purely creative users who need Opus-level depth.

Pricing and Accessibility Breakdown

| Plan | Cost | Key limits | Best for |
| --- | --- | --- | --- |
| Free | $0 | Basic usage, 1M context beta | Students, trials |
| Pro | ~$20/month | Higher limits, Claude Cowork and Claude Code | Creators, freelancers |
| Team/Enterprise | Custom | Unlimited, connectors | Businesses |
| API | $3 input / $15 output per 1M tokens | Scales with volume | Developers |

What Marketing Skips: The Hidden Costs

Ads tout benchmarks; reality demands time for prompt engineering, time for debugging AI errors (rare, but they happen), and buy-in to Anthropic’s ecosystem.

There is no seamless migration path from OpenAI. Subscriptions compound if you stack several tools.

Effort: expect to spend 30-60 minutes a week tweaking prompts for peak output.

Decision Checklist

This is for you if…

- You code daily and hate iteration loops.

- Budget allows $20-100/month for productivity multipliers.

- Legacy software plagues your workflow.

- You’re building agents or long-context apps.

You should skip if…

- Pure cost rules (stick to Sonnet 4.5).

- You need the strongest raw reasoning (Opus 4.6).

- You’re non-technical and find the interfaces overwhelming.

- Token budgets are ironclad.

Final Thoughts

Sonnet 4.6 delivers subtle but real gains, and they grow as you learn to prompt it well. Try it on claude.ai and judge how well it handles your own prompts. A good tool adapts to your needs rather than forcing you to adapt to it, so weigh its strengths against the token costs and trade-offs above, find the balance that suits your work, and see whether it elevates it.