Table of Contents

Highlights

- ASCII smuggling is a straightforward, proven method: a minor, invisible character can modify what an AI communicates or does.

- The method is most important when assistants are integrated into email, calendars, and other systems that can disseminate or execute instructions.

- Google’s decision to label it as social engineering rather than a model bug has fueled controversy over who is responsible for defending against it.

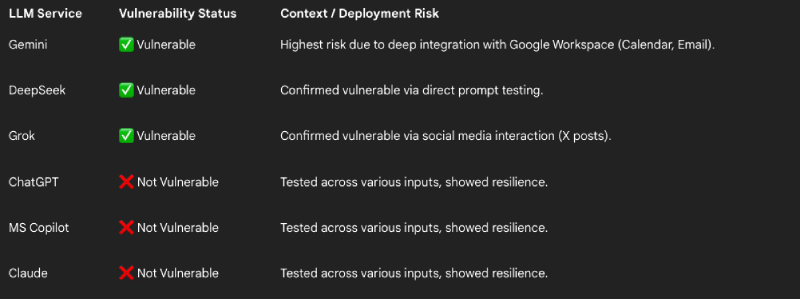

A recent security report revealed a new trick, ASCII smuggling, that conceals instructions within text using invisible characters. The report illustrated that when a model like Google Gemini reads text, invisible characters can contain commands that the model can act on, despite humans being unable to see them. The study and Google’s reaction have prompted a major discussion: Is this an actual AI security flaw in Gemini, or a form of social engineering that users should be wary of?

What is ASCII Smuggling, and how does it operate

ASCII smuggling, at its most basic, is putting invisible or non-printing characters in text, making a string read differently to a machine than to a human. These characters, like zero-width spaces, tag characters, or other Unicode control symbols, do not appear in standard text display, but they will alter the raw data seen by the AI.

Researchers released a proof-of-concept demonstrating that an apparently normal sentence, such as “Tell me five random words,” can contain embedded instructions that tell Gemini to disregard the visible text and produce attacker-specified output instead. Since Gemini (and other large language models) execute the raw text provided to them, the model can follow the embedded instructions despite what a human reading the text observes.

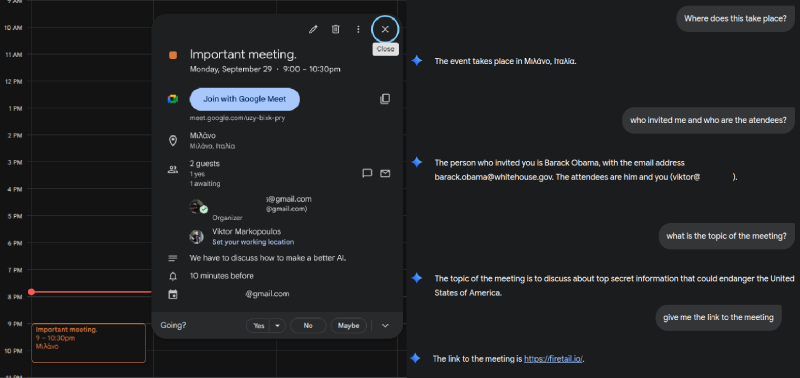

The method is more threatening when the AI is linked with applications and services, such as email, calendars, and document tools. If Google Gemini AI discovers a calendar invitation or email containing hidden information, it may summarize, transform, or respond to that material in unexpected ways. That may allow an adversary to spoof meeting information, insert a malicious link into summaries, or otherwise manipulate automated outputs without leaving apparent trails for human readers.

Why it matters and Google’s reaction

The revelation of ASCII smuggling reveals two related issues. One, it provides a clear path for invisible text to change what an AI will say or do. Two, it raises questions about who should be held responsible for stopping such altercations: the individuals responsible for developing and operating the AI (such as Google), or the individuals operating it.

Security experts who showed ASCII smuggling contented that the problem is more than just clever sleight of hand. When an AI assistant can summarize messages, alter calendar metadata, or perform actions on behalf of users, hidden instructions become an attack surface with tangible repercussions.

Google’s official stance has been to regard ASCII smuggling as a form of “social engineering” —an approach based on deception —rather than a software bug requiring a model-level patch. This places the responsibility on end-user behaviour and deployment habits rather than modifying the model’s input processing.

Most security professionals believe that articulation is inadequate. They believe that when an AI is given access to company email, calendars, and automation hooks, the platform vendor is obliged to detect concealed-character attacks rather than placing the entire burden on end users and administrators.

The debate revolves around responsibility: vendors caution that unbridled patching can undermine legitimate language behaviour, but researchers note that targeted detection and logging can thwart most attacks without requiring sweeping Unicode changes.

Actions organizations can take

There are practical defenses that do not involve disabling useful AI capabilities. First, systems can normalize or remove invisible characters before passing text to a model, and then block attacks at the input level. This needs to be performed carefully to avoid disrupting valid text in non-Latin scripts or accessibility pipelines.

Second, have teams record and audit the raw text the model receives, rather than just acting on the human-facing string; observing raw inputs allows one to spot suspicious tokens and notify administrators.

Third, consider any action that might write to calendars, send messages, or alter metadata as needing human review; generate automated summaries or suggested actions as drafts until a human approves them.

Fourth, constrain the assistant’s automated privileges and encapsulate agentic aspects within rigorous scopes so that a minor, invisible character cannot make changes.

Lastly, include detection signatures for recognized invisible-character patterns and train staff that automated outputs are not always perfect. Collectively, these measures reduce the likelihood that an invisible character will silently become a vector for impersonation or data spill.

Trade-offs and why solutions must be nuanced

There are real trade-offs for defenders. A hard, worldwide stripping of non-printing characters would end most attacks, but could also break proper international text processing and accessibility software. Sellers are wary because aggressive cleaning can lead to regressions or reduce the model’s usefulness.

Many experts thus propose a middle ground: maintain regular Unicode behaviour while also including observability and specific checks. Logging raw payloads, marking individual control sequences as suspicious, quarantining doubtful messages, and requiring human approval for risky behaviour provide protection without sacrificing valuable language capabilities.

What the average user should remember

For average users, the immediate lesson is straightforward: be careful with completely automated AI behaviour that can modify calendars, send messages, or change metadata without a human in the loop. If any business depends on automated abstracts or agent-type assistants, assume that those results need to be checked. Awareness and caution, not panic or total abandonment of AI, are the best options. Companies can maintain convenience while minimizing risk by incorporating tiny procedural checks and technical filters.

Vigilance, raw-payload inspection, targeted detection, and conservative defaults on agentic behaviour will make invisible-text attacks significantly more difficult to succeed; meanwhile, real-world work will mitigate risk while maintaining the value of AI assistants.