Highlights

- Chinese company Huawei has announced that the DeepSeek V3/R1 models have been optimized for use on its cloud services.

- Additionally, Huawei has launched a hybrid-cloud, on-premises deployment solution for DeepSeek.

- The announcement went live on the 12th of February, 2025.

Chinese company Huawei has announced that the DeepSeek V3/R1 models have been optimized for use on its Huawei Cloud services. Ever since it entered the AI space, DeepSeek has disrupted the industry with its price-to-performance ratio. DeepSeek, which has used high-performance graphics processing chips from tech giant Nvidia, has now partnered with Huawei Technologies, bringing its AI models to cloud servers powered by Huawei chips.

DeepSeek partners with Huawei Technologies

According to a report by the South China Morning Post, Huawei Technologies’ cloud computing subsidiary has entered into a collaboration with Beijing-based AI startup SiliconFlow. The venture brings DeepSeek’s AI models to end users via Huawei’s Ascend cloud service. The move comes as the open-source models gain popularity among both Western and Chinese audiences.

Moreover, industry giants such as Microsoft and Amazon have also added support for DeepSeek’s R1 model. Microsoft’s Azure cloud-computing platform and GitHub now support the model, while developers can also access it through Amazon Web Services.

DeepSeek V3/R1 on Huawei Cloud

Huawei’s announcement states that DeepSeek’s flagship model (V3/R1 671B) has been thoroughly adapted and optimized for Huawei Cloud’s Ascend cloud services. The company says the move is intended to meet the needs of commercial business deployment. The Ascend Cloud Service is now compatible with the following DeepSeek models:

- DeepSeek-V3, 671B

- DeepSeek-R1, 671B

- DeepSeek-R1-Distill-Qwen-14B, 14B

- DeepSeek-R1-Distill-Qwen-32B, 32B

- DeepSeek-R1-Distill-Llama-8B, 8B

What are the DeepSeek models?

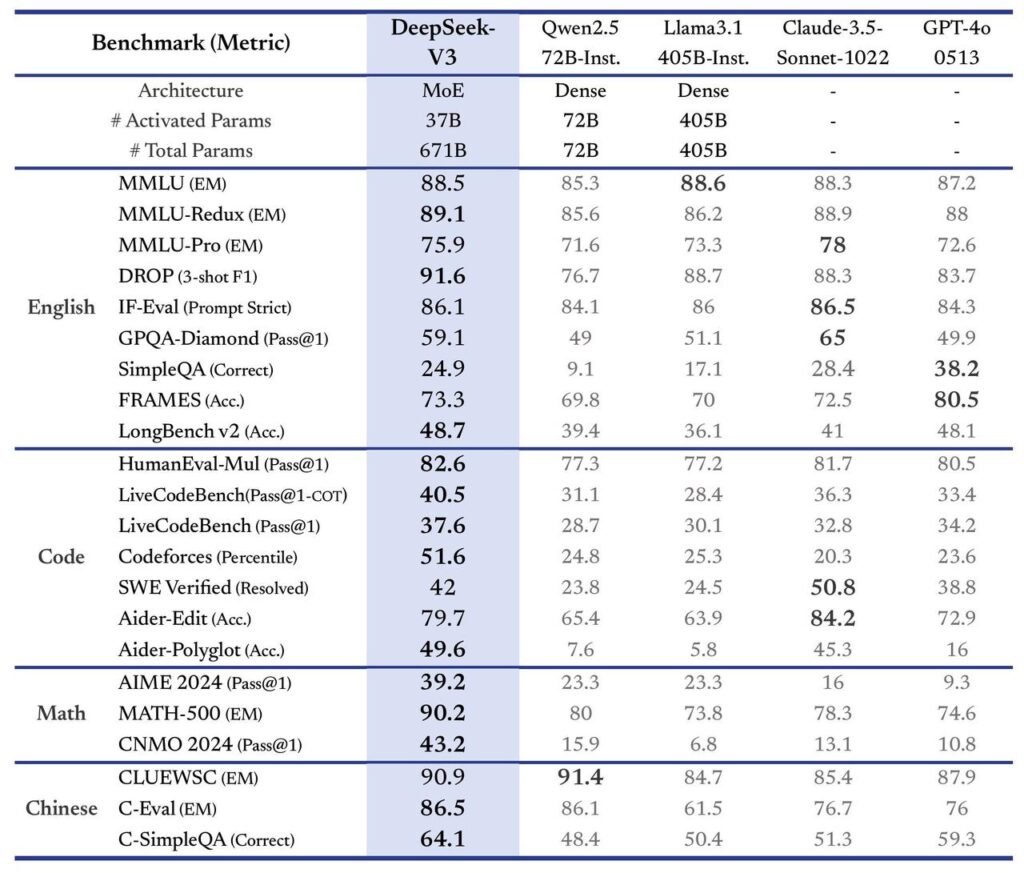

While the V3 and R1 models share a foundation in the Mixture-of-Experts (MoE) architecture, they diverge in design philosophy, capabilities, and application. R1 can be described as an advanced AI model designed primarily for high-speed processing, logical thought, and content generation across various applications. It excels in mathematics, problem-solving, and logical reasoning. V3, by contrast, is a general-purpose large language model with a greater emphasis on scale and efficiency.

DeepSeek-R1-Distill-Qwen, on the other hand, is a series of distilled large language models derived from Qwen 2.5 and trained on outputs from the larger DeepSeek R1 model. Designed to be more efficient and compact, they retain strong performance, especially in reasoning tasks. Similarly, DeepSeek-R1-Distill-Llama is another distilled version of DeepSeek R1, designed to operate within an 8-billion-parameter framework. It aims to deliver competitive performance on tasks related to mathematics, code, and logical reasoning while keeping computational demands low.
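To give a rough sense of what a Mixture-of-Experts layer does, here is a minimal toy sketch in Python. It shows generic top-k routing, where a router scores every expert and only the best-scoring few process a given token. It is not DeepSeek's actual router: the function names, the scores, and the tiny "experts" are all hypothetical and chosen only for illustration.

```python
import math

def softmax(scores):
    """Turn raw router scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_scores, top_k):
    """Pick the top_k highest-scoring experts and renormalize their weights."""
    probs = softmax(router_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]

def moe_forward(x, experts, router_scores, top_k=2):
    """Run only the selected experts and blend their outputs by weight."""
    routes = route_token(router_scores, top_k)
    return sum(weight * experts[i](x) for i, weight in routes)

# Hypothetical experts: each is just a simple function of the input.
toy_experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x * x, lambda x: -x]
toy_scores = [0.0, 2.0, 1.0, -1.0]  # made-up router scores for one token

selected = route_token(toy_scores, top_k=2)
output = moe_forward(3.0, toy_experts, toy_scores, top_k=2)
```

In this toy run only two of the four experts do any work for the token, which is the basic reason an MoE model can hold many parameters while using only a fraction of them per token.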

With these additions, Huawei Cloud Stack will provide a graphical deployment solution for third-party models, letting users deploy DeepSeek models such as V3 and R1 into a hybrid cloud environment through wizard-based operations. Currently, the V3 model can generate 60 tokens per second, three times as fast as V2. It offers enhanced capabilities while keeping API compatibility intact and, of course, remaining open source. Trained on 14.8T high-quality tokens, it has 671B total parameters, with 37B activated for each token.
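The figures quoted above lend themselves to some quick arithmetic. The short Python sketch below uses only the numbers in this article (671B total parameters, 37B activated per token, 60 tokens per second, three times V2's speed). The helper names and the 1,200-token example are illustrative assumptions, and the V2 speed is simply what the "three times" claim implies, not a separately reported figure.

```python
TOTAL_PARAMS = 671e9      # total parameters, as quoted in the article
ACTIVE_PARAMS = 37e9      # parameters activated per token, as quoted
V3_TOKENS_PER_SEC = 60.0  # quoted V3 generation speed

# Implied by "three times as fast as V2"; not an independently reported number.
V2_TOKENS_PER_SEC = V3_TOKENS_PER_SEC / 3

def active_fraction(total, active):
    """Share of the model's parameters used for any single token."""
    return active / total

def generation_seconds(num_tokens, tokens_per_sec):
    """Time to generate num_tokens at a steady tokens-per-second rate."""
    return num_tokens / tokens_per_sec

share = active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS)
v3_time = generation_seconds(1200, V3_TOKENS_PER_SEC)
v2_time = generation_seconds(1200, V2_TOKENS_PER_SEC)

print(f"Active share per token: {share:.1%}")
print(f"1,200 tokens on V3: {v3_time:.0f} s, on V2: {v2_time:.0f} s")
```

On these numbers, only about 5.5% of the model's parameters are used for any one token, and a hypothetical 1,200-token response would take about 20 seconds on V3 versus about 60 seconds at the implied V2 speed.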

DeepSeek models cost less than a US dollar

SiliconFlow has stated that the price of DeepSeek’s V3 AI model on its platform has been discounted to 1 yuan per 1 million input tokens, or approximately $0.14. In India, the model is priced at INR 1 per million tokens as of February. Pricing at this level encourages wider adoption of the model in an already growing market.
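To see how this pricing scales, here is a small Python sketch that estimates input cost from a token count. It assumes the discounted rate of 1 yuan per 1 million input tokens and uses the exchange rate implied by the article's own figures (1 yuan is roughly $0.14), not a live rate. The function names are hypothetical, and output-token pricing is not covered.

```python
from decimal import Decimal

PRICE_CNY_PER_MILLION = Decimal("1")  # discounted input price from the article
USD_PER_CNY = Decimal("0.14")         # implied by the article's figures, not a live rate

def input_cost_cny(tokens):
    """Cost in yuan for a given number of input tokens."""
    return PRICE_CNY_PER_MILLION * Decimal(tokens) / Decimal(1_000_000)

def input_cost_usd(tokens):
    """Approximate cost in US dollars at the assumed exchange rate."""
    return input_cost_cny(tokens) * USD_PER_CNY

for tokens in (250_000, 1_000_000, 50_000_000):
    print(f"{tokens:>12,} tokens: {input_cost_cny(tokens)} CNY, about ${input_cost_usd(tokens)}")
```

Under these assumptions, even 50 million input tokens would come to 50 yuan, or about $7.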

Despite the bans DeepSeek has faced from several US states and companies, it still sees overwhelming server demand. This suggests that interest in AI is at an all-time high, and demand for such services is expected to rise over time.