Highlights

- NVIDIA RTX PRO Servers deliver up to 45× performance gains, transforming enterprise AI, simulation, and design workloads with Blackwell architecture.

- The servers are available on-premises and in the cloud, with support from OEMs such as Dell, Cisco, HPE, and Lenovo and from cloud providers such as Google Cloud.

- The platform is robust, scalable, and energy-efficient, helping businesses move into the AI-driven era.

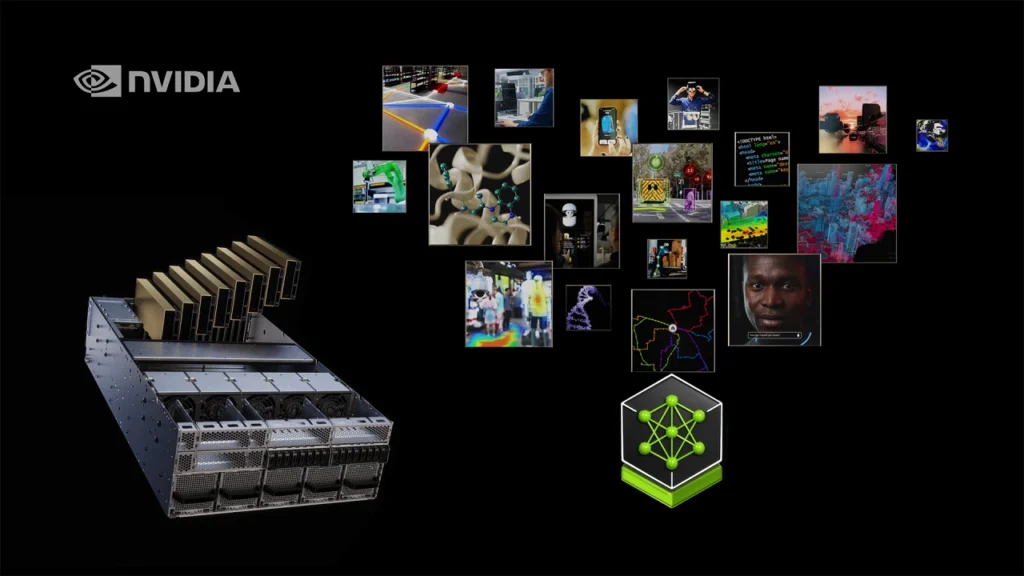

On August 26, 2025, NVIDIA released RTX PRO Servers, powered by the RTX PRO 6000 Blackwell Server Edition GPU, to help organizations move from traditional clusters to AI factories without tearing down and rebuilding their data centers. According to the announcement, enterprise customers deploying NVIDIA RTX PRO Servers include Disney, Foxconn, Hitachi Ltd., Hyundai Motor Group, Lilly, SAP, and TSMC, and the systems provide enough compute to accelerate a wide range of AI, design, simulation, and business workloads.

Blackwell Architecture Provides Performance Gains Across Nearly All Workloads

RTX PRO Servers use the Blackwell architecture, giving universal acceleration across:

- Agentic AI (AI agents), generative AI, and large language models

- Physical AI, robotics, digital twins, synthetic data generation

- Scientific computing, simulation, advanced design, as well as graphics/video workloads

Performance highlights from the announcement:

- Up to 3× better price-performance for reasoning models like NVIDIA’s Llama Nemotron Super versus H100 GPU systems

- Up to 4× faster digital twin simulation, synthetic data generation, and similar workloads compared with systems using L40S GPUs

In short, the servers are designed for demanding workloads: with eight RTX PRO 6000 GPUs and ConnectX-8 SuperNICs, a system delivers up to 30 PFLOPS of FP4 compute and 3 PFLOPS of RTX graphics performance, with all-to-all bandwidth reaching 800 GB/s.
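To put the aggregate figures in per-GPU terms, the short sketch below divides the quoted system totals by the eight GPUs. It assumes the totals split evenly across GPUs, which the announcement does not state, and the function and constant names are purely illustrative. The results are back-of-the-envelope shares of "up to" figures, not measured per-GPU performance.

```python
# Aggregate "up to" figures quoted in the text for an eight-GPU system.
SYSTEM_FP4_PFLOPS = 30.0   # FP4 compute
SYSTEM_RTX_PFLOPS = 3.0    # RTX graphics performance
GPU_COUNT = 8


def per_gpu_share(aggregate: float, gpu_count: int) -> float:
    """Evenly split an aggregate figure across GPUs.

    Assumption: the total scales linearly with GPU count.
    """
    if gpu_count <= 0:
        raise ValueError("gpu_count must be positive")
    return aggregate / gpu_count


fp4_per_gpu = per_gpu_share(SYSTEM_FP4_PFLOPS, GPU_COUNT)
rtx_per_gpu = per_gpu_share(SYSTEM_RTX_PFLOPS, GPU_COUNT)

print(f"FP4 share per GPU: {fp4_per_gpu} PFLOPS")
print(f"RTX share per GPU: {rtx_per_gpu} PFLOPS")
```

Under the even-split assumption, this works out to 3.75 PFLOPS of FP4 compute and 0.375 PFLOPS of RTX graphics performance per GPU.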

Enterprise-Grade Software and Ecosystem

RTX PRO Servers are integrated with NVIDIA’s enterprise software stack:

- NVIDIA AI Enterprise platform (microservices, AI frameworks, tools)

- Omniverse libraries and Cosmos world foundation models, which enable physical AI and digital twins

The servers are supported across Windows, Linux, and all major hypervisors.

RTX PRO Servers are part of the NVIDIA AI Factory validated design. NVIDIA provides end-to-end guidance on architecture, deployment, and management, which shortens AI-factory rollouts and reduces deployment risk.

Broad Access: On-Premises Hardware and Cloud Options

NVIDIA RTX PRO Servers are available through a broad ecosystem to suit different deployment needs:

- On-premises hardware through global OEM partners: Cisco, Dell Technologies, HPE, Lenovo, Supermicro, and others

- Cloud instances are already available on CoreWeave and Google Cloud; AWS, Nebius, and Vultr are expected to follow by the end of the year.

This broad access, from enterprise-grade on-premises hardware to flexible cloud consumption, gives enterprises entry points at any stage of AI adoption.

Mid-Tier and 2U Form Factors: Accessibility & Efficiency

NVIDIA is also offering more accessible RTX PRO configurations.

Mid-tier RTX PRO Servers were presented as a lower-cost entry point, with up to a 45× performance improvement from moving traditional CPU workloads to GPU acceleration.

TSMC, for example, is using this technology to build 3D digital twins of its fabs, reducing simulation times from weeks to seconds.

At SIGGRAPH 2025, NVIDIA announced a 2U rack-mount RTX PRO 6000 server featuring a pair of Blackwell GPUs, enabling denser and more energy-efficient deployments.

The cited advantages are up to 45× better performance and 18× better energy efficiency than air-cooled 2U single-CPU systems. Partners such as Dell (R7725 2U), Cisco, HPE, Lenovo, Supermicro, and others all offer this compact form factor.
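As a rough way to relate the two ratios, the sketch below derives the power draw they would imply. It assumes that "energy efficiency" means performance per watt and that both figures refer to the same workload and the same baseline system. Neither assumption is confirmed by the announcement, and the names are illustrative only.

```python
# Ratios quoted in the text, relative to a 2U single-CPU baseline.
PERF_RATIO = 45.0        # performance vs. baseline
EFFICIENCY_RATIO = 18.0  # energy efficiency vs. baseline


def implied_power_ratio(perf_ratio: float, efficiency_ratio: float) -> float:
    """Return the implied power draw relative to the baseline.

    Assumption: efficiency = performance / power, so
    power_ratio = perf_ratio / efficiency_ratio.
    """
    if efficiency_ratio <= 0:
        raise ValueError("efficiency_ratio must be positive")
    return perf_ratio / efficiency_ratio


power_ratio = implied_power_ratio(PERF_RATIO, EFFICIENCY_RATIO)
print(f"Implied power draw vs. baseline: {power_ratio}x")
```

Under these assumptions, the GPU system would draw about 2.5 times the baseline's power while delivering 45 times the performance. If the two figures come from different workloads, the calculation does not apply.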

Strategic Impact: Partner Ecosystem & Channel Opportunity

Channel partners and service providers are being positioned to pivot from traditional services toward AI-centric managed services built on NVIDIA RTX PRO Servers. By building on NVIDIA’s full platform, partners can reach new markets for virtual desktops, simulation-as-a-service, robotics, AI agents, and physical AI across a wide range of industries and use cases.

Summary: A Pivotal Moment for Enterprise AI Infrastructure

The introduction of NVIDIA RTX PRO Servers marks a pivotal moment, offering:

- Enterprise-ready AI infrastructure that works with existing data centers

- Significant performance gains across AI, simulation, graphics, and design

- Flexible access through on-premises systems or the cloud

- Configurable, scalable deployments through mid-tier and 2U systems

- A strong partner ecosystem enabling new business models and regionally driven AI adoption

As the new AI era begins, NVIDIA RTX PRO Servers offer organizations a practical, robust foundation for turning AI factories into operational reality.