
- Web scraping at scale relies on proxies to ensure anonymity, avoid IP bans, and access geo-restricted data safely.

- Common web scraping methods include custom scrapers, headless browsers, and no-code tools; choose one based on your skills and budget.

- Web scraping helps businesses make smarter decisions by extracting real-time data from the ever-growing web.

We’ve all heard that data is the modern-day fuel. Companies worldwide are fighting tooth and nail to get their hands on as much information as possible. And to fulfill their data needs, they’re looking at the web.

So, why is web data so important?

Businesses need data to make better decisions. They want to predict customer behavior and market trends so they can adapt and serve their customers accordingly. They also want to keep an eye on their competitors to ensure they’re not falling behind. In short, businesses need data to know what they’re doing right or wrong and what they should do next.

With more and more people using the internet, the web has emerged as a gold mine of data. A GovLab Index summary suggested that 90% of the data available online had been created in the previous two years. So companies have a lot of useful information to extract, provided they do it correctly.

In this post, we’ll delve into different methods of scraping the web safely.

Why are Proxies Essential in Data Scraping?

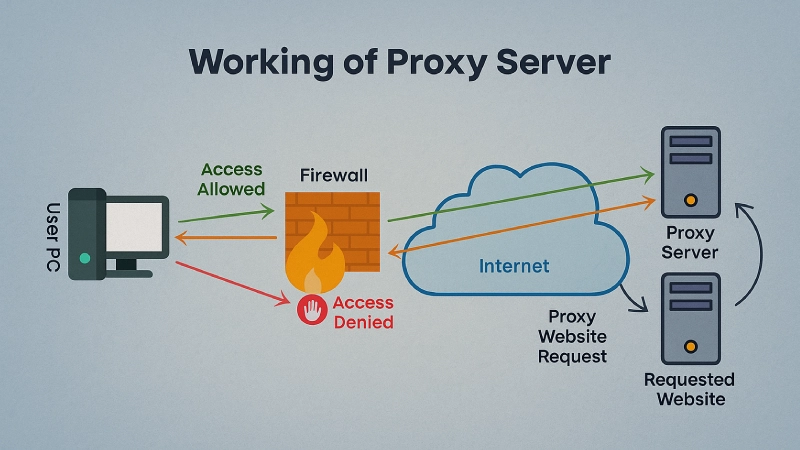

A proxy is a third-party server that sits between you and the websites you visit and forwards your requests on your behalf. When you make a request to a website through a proxy, the website sees the proxy’s IP address instead of yours, allowing you to scrape safely and anonymously.
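As a rough illustration, Python’s standard library can route requests through a proxy with `urllib.request.ProxyHandler`. This is a minimal sketch, not a production client: the proxy address `http://proxy.example.com:8080` is a placeholder, and `build_proxy_opener` and `fetch` are hypothetical helper names.

```python
import urllib.request

# Placeholder proxy address (hypothetical); replace with your provider's endpoint.
PROXY_URL = "http://proxy.example.com:8080"


def build_proxy_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends HTTP and HTTPS traffic through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch(opener: urllib.request.OpenerDirector, url: str, timeout: float = 10.0) -> str:
    """Download url through the opener; the target site sees the proxy's IP, not yours."""
    request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with opener.open(request, timeout=timeout) as response:
        # Fall back to UTF-8 when the server does not declare a charset.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

A call such as `fetch(build_proxy_opener(PROXY_URL), "https://example.com")` would then download the page via the proxy, assuming the proxy is reachable.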

There are several reasons why you should use a proxy server when scraping the web, such as:

- Proxies enable you to crawl a site with a much lower risk of your spider getting blocked or banned.

- With proxies, you can distribute a high volume of requests across multiple IP addresses. Because many anti-scraping tools flag unusually high request volumes from a single IP address, spreading requests across proxies makes your scraper harder to detect.

- A proxy server allows you to make requests from a specific geographic region. This can be helpful if your target website has location-based restrictions.

- Proxies help you bypass blanket IP bans that can stop you from scraping a website.

- With proxies, you can run many concurrent sessions against a website without all of them sharing a single IP address.
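To spread requests across several IP addresses, and optionally pick them from a specific region, a scraper can rotate through a pool of proxies. The sketch below assumes a hypothetical pool of placeholder proxy URLs, each tagged with a country code; `ProxyPool` and `next_proxy` are illustrative names, not part of any library, and round-robin is only one possible rotation strategy.

```python
from __future__ import annotations

import itertools
from typing import Iterator


class ProxyPool:
    """Round-robin rotation over a pool of proxies, optionally filtered by country."""

    def __init__(self, proxies: dict[str, str]) -> None:
        # Maps each proxy URL to a country code, e.g. {"http://a.example:8080": "US"}.
        self._proxies = dict(proxies)
        # One independent rotation per country filter (None means "any country").
        self._cycles: dict[str | None, Iterator[str]] = {}

    def next_proxy(self, country: str | None = None) -> str:
        """Return the next proxy in rotation, restricted to one country if given."""
        if country not in self._cycles:
            candidates = [
                url for url, code in self._proxies.items()
                if country is None or code == country
            ]
            if not candidates:
                raise LookupError(f"no proxies available for country {country!r}")
            # itertools.cycle repeats the candidate list endlessly, in order.
            self._cycles[country] = itertools.cycle(candidates)
        return next(self._cycles[country])
```

Each call returns the next proxy in the pool, so consecutive requests leave from different IP addresses; passing a country code such as `"US"` limits the rotation to proxies in that region.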

That said, it’s also essential to use the right type of proxies for the best results.

Some of the best proxy types for web scraping are:

- Residential Proxies: Residential proxies use IP addresses that internet service providers (ISPs) assign to real, physical devices. Because their traffic looks like that of ordinary users, they are very hard to detect.

- Datacenter Proxies: A datacenter proxy is a private proxy server that has no affiliation with an ISP. Its IP addresses come from secondary sources, such as hosting providers, and still hide your real IP address. Datacenter proxies are faster than residential proxies, but on the downside, their IP addresses belong to data center ranges that some anti-scraping tools can easily identify.

- Static Residential Proxies: These proxies combine features of residential and datacenter proxies. They are hosted in data centers but registered under ISPs, so they aim to offer the speed of datacenter proxies with the low detection risk of residential proxies.
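The speed-versus-detection trade-off between these proxy types suggests one common pattern: try the faster tier first and escalate to a harder-to-detect tier only when the site blocks the request. The sketch below assumes that HTTP 403 or 429 signals a block (sites may signal blocks differently) and takes a hypothetical `fetch_status(url, proxy)` callable so the logic can be tested without network access; `fetch_with_fallback` and the tier names are illustrative.

```python
from __future__ import annotations

from typing import Callable, Sequence

# Status codes treated as "blocked" (an assumption; sites signal blocks in different ways).
BLOCK_STATUSES = {403, 429}


def fetch_with_fallback(
    url: str,
    tiers: Sequence[tuple[str, Sequence[str]]],
    fetch_status: Callable[[str, str], int],
) -> tuple[str, str, int]:
    """Try each proxy tier in order, escalating when every proxy in a tier is blocked.

    tiers: ordered (tier_name, proxy_urls) pairs, e.g. datacenter first, then residential.
    fetch_status: hypothetical callable that requests url via a proxy and returns the status.
    Returns (tier_name, proxy_url, status) for the first response that is not a block.
    """
    for tier_name, proxies in tiers:
        for proxy in proxies:
            status = fetch_status(url, proxy)
            if status not in BLOCK_STATUSES:
                return tier_name, proxy, status
    raise RuntimeError(f"all proxy tiers were blocked for {url}")
```

Ordering the tiers this way keeps most traffic on the faster proxies and reserves the residential pool for pages that actually need it.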

How to Gather Data

Once you’ve set up your proxy server, it’s time to move on to the actual extraction process. Here, you’ve got three options:

1. Build Your Own Web Scraper

If your team includes expert developers who know web scraping with Python, you can build your own web scraper. However, this is not always the most feasible option, because creating a web scraper requires a significant amount of time and effort.

Besides, custom-made scrapers tend to have limited functionality and don’t adapt easily to multiple websites and markets. With websites increasingly deploying anti-scraping programs, self-coded scrapers are likely to struggle.

Those who understand the difference between web scraping and crawling will realize that custom scrapers are best suited for crawling purposes.
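For a sense of what building your own scraper involves, here is a minimal sketch using only Python’s standard library: `html.parser.HTMLParser` collects link targets and their anchor text from a page. `LinkExtractor` and `extract_links` are illustrative names; a real scraper would also need fetching, error handling, retries, and site-specific parsing rules, which is where much of the time and effort goes.

```python
from __future__ import annotations

from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect (absolute_url, anchor_text) pairs from <a href="..."> tags."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[tuple[str, str]] = []
        self._href: str | None = None  # href of the <a> tag currently open, if any
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links like "/about" against the page URL.
                self._href = urljoin(self.base_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse runs of whitespace in the anchor text.
            self.links.append((self._href, " ".join("".join(self._text).split())))
            self._href = None


def extract_links(html: str, base_url: str) -> list[tuple[str, str]]:
    """Parse an HTML string and return its links in document order."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links
```

Following the extracted URLs page by page is essentially what a crawler does, which is why hand-written code like this fits crawling-style jobs well.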

2. Use a Headless Browser

Headless browsers are web browsers without a graphical user interface. They load and render pages like normal browsers, including running JavaScript, but they can be programmed to follow specific instructions. These browsers are ideal for running automated quality assurance tests and also work well for scraping websites. Popular tools for controlling headless browsers include Selenium and Puppeteer, which can drive browsers such as Chrome in headless mode.
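As a sketch of driving a headless browser with Selenium, the code below assumes Selenium 4 and a local Chrome installation; `chrome_args` and `page_title` are hypothetical helper names, and the proxy URL in the usage note is a placeholder. Selenium is imported inside `page_title` so the argument-building helper can be used on its own.

```python
from __future__ import annotations


def chrome_args(proxy_url: str | None = None) -> list[str]:
    """Command-line switches for headless Chrome, optionally routed through a proxy."""
    args = ["--headless=new"]  # run Chrome without a visible window (recent Chrome versions)
    if proxy_url:
        # Chrome's --proxy-server switch does not accept user:password credentials.
        args.append(f"--proxy-server={proxy_url}")
    return args


def page_title(url: str, proxy_url: str | None = None) -> str:
    """Load url in headless Chrome via Selenium and return the rendered page title."""
    # Imported lazily so chrome_args() works even without Selenium installed.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    for arg in chrome_args(proxy_url):
        options.add_argument(arg)
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)  # page JavaScript runs as it would in a normal browser
        return driver.title
    finally:
        driver.quit()  # always shut the browser down, even if loading fails
```

For example, `page_title("https://example.com", "http://proxy.example.com:8080")` would load the page through the placeholder proxy and return its title, assuming Chrome and a compatible driver are available.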

3. Use Web Scraping Software

Anti-scraping tools are on the rise, and website owners are increasingly focused on blocking web scraping attempts. Web scraping tools, like Mozenda and Outwit, help you overcome this hurdle.

These tools automate the entire web scraping process, so you don’t need to write any code. This makes it easy for a business to extract data without in-house technical expertise.

Conclusion

Web scraping technologies have advanced significantly over the past decade. Data extraction from the web has become an essential part of data analytics and management strategy for SMEs and enterprises.

However, to scrape the web successfully, you need to choose the right scraping method. So, pick the best proxy type for your needs and decide on a scraping method that aligns with your budget and resources.