Highlights

- The rollout of YouTube AI has always been framed as a way to empower creativity, but recent experiments show how fragile that promise can be.

- What seemed like a minor tweak in video quality has instead opened a larger conversation about ownership, consent, and trust.

- For everyday viewers, the difference may be barely noticeable, but for creators, it raises many unsettling questions.

When YouTube announced its latest AI tools earlier in 2025, the platform framed them as creative enhancers, with features such as auto-dubbing, AI-generated backgrounds, and smarter inspiration tools meant to unlock new ways for people to make videos. But the conversation around YouTube AI has shifted dramatically in recent weeks. A BBC Future report revealed that YouTube had been quietly applying machine-learning techniques to certain YouTube Shorts, enhancing image clarity and reducing noise, all without creators’ knowledge or consent.

For casual viewers, the update may sound harmless; after all, who does not want a clearer, sharper video? Yet for many creators, this experiment felt like a breach of trust. The controversy raises larger questions about how platforms should balance innovation with user autonomy in the age of AI.

The Quiet Experiment.

According to the BBC investigation, YouTube was using machine-learning systems, distinct from generative AI, to automatically clean up videos during processing. This included reducing blur, denoising footage, and subtly improving compression. The platform confirmed that the tests were limited to Shorts, YouTube’s short-form video format, and insisted the goal was simply to improve video quality in ways similar to what modern smartphones already do.

The problem was not the technology itself, but rather how it was deployed. Creators noticed changes in their videos without being notified or given the option to turn off the feature. In online forums, many expressed frustration that their content had been altered after upload. For people who rely on YouTube for their livelihood, even minute adjustments can undermine the consistency of their brand.

YouTube’s Response.

After the backlash, YouTube’s Insider account posted on X (formerly Twitter) to clarify the situation. The company emphasized that the test did not involve generative AI in any way, nor was it an attempt to upscale footage beyond its original quality. Instead, it described the test as a traditional machine-learning experiment aimed at “unblurring, denoising, and improving clarity.”

From YouTube’s perspective, the distinction mattered. The company has been vocal about its philosophy regarding AI, outlining on its “How YouTube Works” page that AI should empower creativity rather than replace it. It also highlights responsible use, including requiring disclosure when videos contain realistic AI-altered content, particularly in sensitive contexts like politics or news. In the company’s view, small enhancements to YouTube Shorts were just another step in maintaining quality for viewers.

But many creators were not convinced. Even if the intentions were positive, the lack of communication reinforced fears that platforms see user content as something they can modify at will.

Why Creators are Concerned.

To understand the pushback, it helps to consider the creator’s perspective. For professional YouTubers, every detail in a video, from colour grading to background noise, is usually a creative choice. Having the platform silently intervene erases that control. Even if the changes are minor, they set a precedent that the platform’s judgment can override the creator’s intent.

Beyond artistic integrity, there is also the issue of trust. Many felt blindsided that YouTube was experimenting on their content without consent. Discussions on web forums captured this frustration, with users suggesting that if YouTube truly believed in the feature, it could have been rolled out as an optional “enhance” button rather than a background process.

For creators, the episode shows a recurring tension with big platforms: innovations are often rolled out for the benefit of viewers, advertisers, or the platform’s bottom line, but creators bear the risks when things go wrong.

The AI Picture in Modern Times.

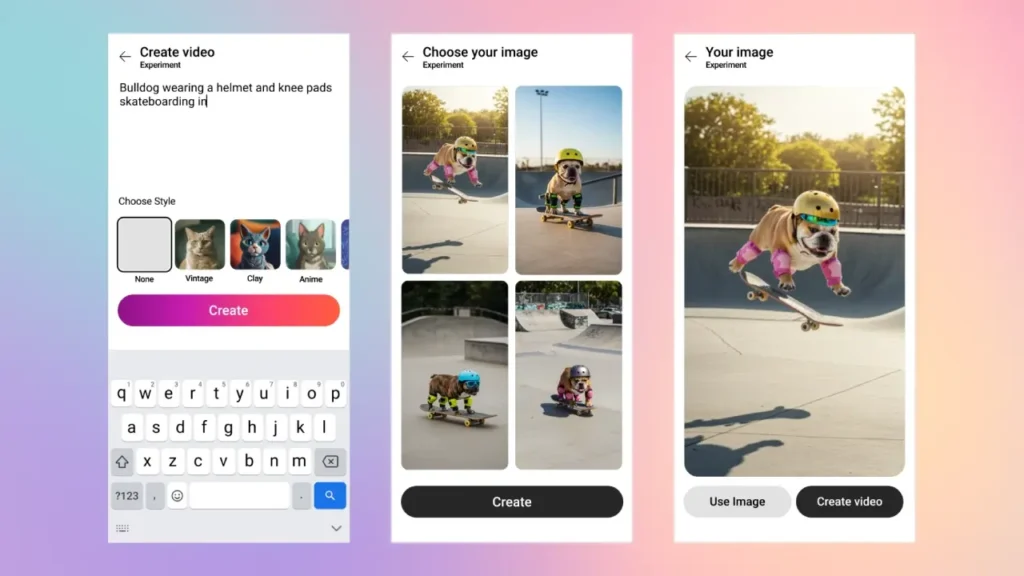

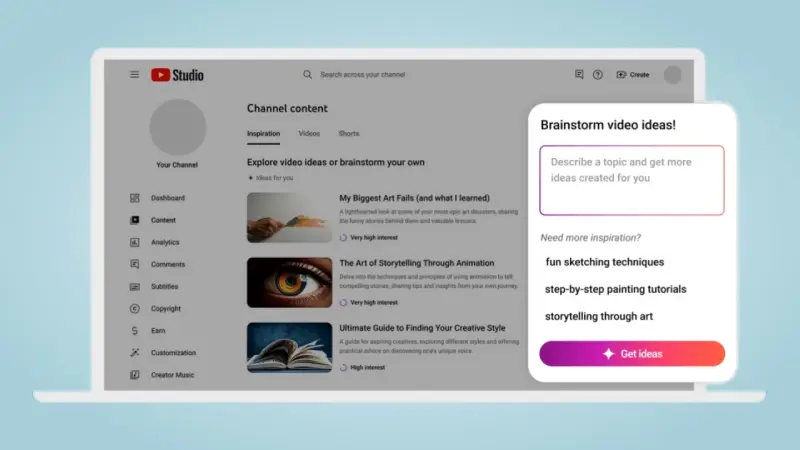

This controversy comes at a time when YouTube is investing heavily in AI. The platform has rolled out tools like Dream Screen, which generates video backgrounds; Auto Dubbing, which automatically translates content into multiple languages; and new AI-driven recommendation and ideation features inside YouTube Studio.

These features have been generally well received, especially because they are clearly labelled and offered as optional aids to creators. YouTube has also introduced policies requiring disclosure for realistic AI-generated content, alongside tools that allow individuals to request the removal of videos that simulate their likeness or voice. In these cases, AI is framed as a supportive tool, not an invisible hand altering work behind the scenes.

That’s why the YouTube Shorts experiment hit such a nerve. It contradicted the company’s public emphasis on transparency and choice. If AI is meant to empower, critics argue, then why were creators left out of the loop?

Walking the Tightrope Between Progress and Consent.

The incident highlights a deeper dilemma for platforms like YouTube: how to innovate rapidly without undermining the very communities that sustain them. On the one hand, there is a strong case for using AI to improve technical quality, reduce bandwidth costs, and offer viewers a better experience. On the other hand, the people who create the content want to retain control over how their work is presented.

The solution may lie in balance. Offering opt-in settings, clear labelling, and open communication would allow creators to decide whether enhancements align with their vision. Transparency can turn potential backlash into collaboration, ensuring that AI experiments are seen as opportunities rather than intrusions.

As YouTube continues to expand its AI offerings, it will need to reckon with the questions its users and creators raise. Innovation may keep the platform ahead, but it is trust that keeps creators, and their audiences, coming back.