Highlights

- Uber and NVIDIA have partnered to develop a smarter and safer AI-Powered Robotaxi by combining Uber’s real-world ride data with NVIDIA’s advanced AI systems.

- NVIDIA will use DGX Cloud and Cosmos World to train autonomous models capable of handling complex driving scenarios.

- The collaboration aims to bring fully autonomous vehicles to Uber’s ridesharing platform, reshaping urban mobility and the future of transportation.

Uber (NYSE: UBER) and NVIDIA (NASDAQ: NVDA) have entered a deep partnership to accelerate large-scale autonomous mobility. The plan is to bring Level 4-ready AI-Powered Robotaxis, vehicles capable of driving themselves with no human driver under defined conditions, directly into Uber’s ride-hailing platform.

This is not positioned as a small pilot. Uber aims to begin scaling a global autonomous fleet starting in 2027. Over time, the companies are targeting up to 100,000 Level 4-ready vehicles on Uber’s network.

The idea is simple for the rider: open the Uber app and, alongside a human-driven ride option, eventually see an option to book an autonomous vehicle powered by NVIDIA’s AI stack.

A Renewed Push to Autonomous Mobility

Uber and NVIDIA are trying to solve the most challenging part of self-driving at scale: not just making a demo car drive, but making tens of thousands of vehicles operate safely in real cities, under real traffic rules, with real passengers.

The core approach is to merge two strengths:

- Uber’s global driving data, gathered from millions of trips across airports, dense city intersections, unpredictable weather, night driving, and local driving behaviors.

- NVIDIA’s AI training, simulation, in-vehicle compute, and safety stack, explicitly built for Level 4 autonomy.

To accelerate this loop, Uber and NVIDIA are building a joint AI data factory powered by NVIDIA Cosmos, NVIDIA’s world-scale platform for developing foundation models. Cosmos curates and processes enormous amounts of real-world and synthetic driving data, helping train increasingly capable autonomous driving models. NVIDIA will also use DGX Cloud for heavy AI training and large-scale simulation.

The goal is continuous improvement: Uber’s ride data feeds training; NVIDIA’s models learn from it; improved models are deployed back into the vehicles.

NVIDIA’s Technology Powering the Vision

The backbone of the plan is NVIDIA’s new DRIVE AGX Hyperion 10 platform.

What is DRIVE AGX Hyperion 10?

It’s a production-ready compute and sensor architecture designed to make any vehicle “Level 4-ready.” Instead of trying to build everything from scratch, automakers can adopt it as the baseline electronics, compute, and perception hardware for their upcoming AI-Powered Robotaxis, vans, shuttles, and autonomous trucks.

Hyperion 10 includes:

- The DRIVE AGX Thor in-vehicle platform, built on NVIDIA Blackwell architecture.

Each unit delivers more than 2,000 FP4 teraflops (around 1,000 TOPS INT8) of real-time compute. That level of computing is meant to fuse data from all sensors, run transformer-scale AI models, and make split-second driving decisions safely.

- A qualified, full-surround sensor suite: up to 14 HD cameras, nine radars, one lidar, and 12 ultrasonic sensors.

This gives the vehicle 360-degree awareness in complex environments, such as city streets.

- A safety-certified automotive operating system (DriveOS) and NVIDIA’s DRIVE AV software stack for perception, planning, and control in Level 4 scenarios.
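To make the hardware inventory above easier to scan, here is a minimal Python sketch that records the quoted figures as plain data and derives two simple numbers from them. The class and field names (`SensorSuite`, `ComputeUnit`, and so on) are hypothetical and chosen only for illustration; they are not part of any NVIDIA API. The sensor counts are the "up to" maximums quoted above, and the compute figures are the approximate values from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorSuite:
    """Maximum sensor counts quoted for Hyperion 10 (illustrative names)."""
    cameras: int = 14
    radars: int = 9
    lidars: int = 1
    ultrasonics: int = 12

    def total(self) -> int:
        # Sum of every sensor in the full-surround suite.
        return self.cameras + self.radars + self.lidars + self.ultrasonics


@dataclass(frozen=True)
class ComputeUnit:
    """Approximate per-unit compute figures quoted for DRIVE AGX Thor."""
    fp4_teraflops: float = 2000.0  # "more than 2,000 FP4 teraflops"
    int8_tops: float = 1000.0      # "around 1,000 TOPS INT8"

    def fp4_to_int8_ratio(self) -> float:
        # Ratio of the two quoted throughput figures.
        return self.fp4_teraflops / self.int8_tops


suite = SensorSuite()
thor = ComputeUnit()
print(suite.total())             # 36
print(thor.fp4_to_int8_ratio())  # 2.0
```

The 2:1 ratio simply reflects the two quoted figures: the FP4 number is about twice the INT8 number, so the two should not be compared directly when lining Thor up against other platforms.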

Because Hyperion 10 is modular and validated, manufacturers can start with a known-good autonomous reference design rather than reinventing hardware, thermal, safety, and sensor placement for each new vehicle. That speeds rollout and lowers cost per vehicle.

For Uber, this matters. A standardized Level 4-ready platform means Uber doesn’t have to deeply customize the app for every new autonomous partner. The network can onboard different AI-Powered Robotaxi suppliers, as long as they align with NVIDIA’s Hyperion 10/DRIVE stack, and a common validated baseline should make the autonomous systems more reliable once they are on the road.

Why Uber Chose Partnership Instead of Rebuilding In-House

Uber has worked on autonomy before, including running its own self-driving unit, but later chose not to keep building a complete hardware/software autonomy stack internally.

This new model is cleaner:

- Uber contributes demand (riders) and data (edge-case driving situations from real trips).

- NVIDIA contributes the AI stack, training infrastructure, and in-vehicle compute needed to enable autonomous driving.

- Automakers and autonomy partners contribute Level 4-ready vehicles built on a common platform (Hyperion 10 + DRIVE AGX Thor).

For Uber, the benefits are speed, lower R&D cost, and a realistic path to deploy at city scale without reinventing an entire automotive engineering pipeline.

For NVIDIA, it’s a way to cement its position not just in data centers, but in “physical AI” — AI acting in the real world, on roads, carrying passengers, moving freight.

A Growing Level 4 Ecosystem Around NVIDIA DRIVE

This plan is not just Uber and NVIDIA working in isolation. Multiple automakers and autonomy firms are already aligning with NVIDIA’s Level 4 platform:

- Stellantis

Developing AV-Ready Platforms tuned for AI-Powered Robotaxi duty cycles. These platforms integrate NVIDIA’s full-stack AI and are being built to interoperate with Uber’s global mobility network. Stellantis is also working with Foxconn on hardware and systems integration. - Lucid

Advancing Level 4 autonomous capabilities for its next-generation premium passenger vehicles in the U.S., using NVIDIA’s DRIVE Hyperion platform and AV software stack. - Mercedes-Benz

Exploring collaboration using its own MB.OS alongside NVIDIA DRIVE AGX Hyperion. The vision: a chauffeured, Level 4 luxury experience that combines autonomy, safety, and high-end comfort in vehicles like the S-Class. - Aurora, Volvo Autonomous Solutions, Waabi

Applying the same NVIDIA DRIVE platform to long-haul freight. These companies are building Level 4 autonomous trucks on NVIDIA DRIVE AGX Thor. So the same core NVIDIA autonomy stack that powers urban AI-Powered Robotaxi can also move goods across highways with little or no human intervention. - Other autonomy players

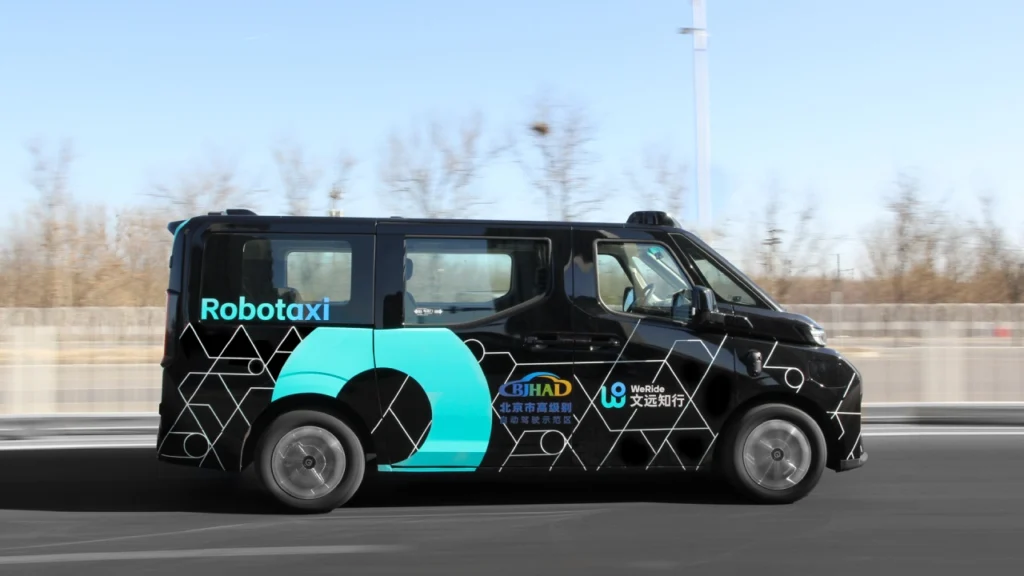

Avride, May Mobility, Momenta, Nuro, Pony.ai, Wayve, and WeRide are all developing Level 4 software stacks on top of NVIDIA DRIVE. That means a wide range of passenger mobility, shuttle, and delivery use cases could plug into the same ecosystem.

This matters because Uber’s network is essentially a marketplace. If multiple vehicle makers and autonomy teams adopt a compatible NVIDIA platform, Uber can source autonomous supply from various vendors across numerous cities through a single app.

Safety, Certification, and Trust: NVIDIA Halos

Scaling AI-Powered Robotaxis isn’t just a technical problem. Cities and regulators have to approve them.

To address that, NVIDIA has introduced NVIDIA Halos, a new safety and certification framework for physical AI systems such as autonomous vehicles.

Halos includes:

- An AI Systems Inspection Lab dedicated to auditing safety, cybersecurity, and reliability from “cloud to car.”

- The Halos Certified Program, meant to evaluate and certify autonomous platforms against strict safety criteria before large-scale deployment.

Companies such as AUMOVIO, Bosch, Nuro, and Wayve are among the first involved. The lab is accredited by the ANSI National Accreditation Board (ANAB), which gives these audits regulatory weight.

In plain terms: NVIDIA is trying to answer the most significant policy questions up front (Who’s liable? Is the AI safe? Can it be trusted at city scale?) so that governments are more comfortable letting thousands of Level 4 vehicles operate on public streets.

What this means for drivers, cities, and riders

For riders:

The pitch is more reliable mobility — especially late at night, around airports, or in areas where human drivers are limited. The experience should feel like booking a regular Uber ride, except the vehicle may drive itself using NVIDIA’s AI.

For current human drivers:

Work doesn’t disappear instantly, but it changes. Over time, roles shift from physically driving to managing, overseeing, maintaining, charging, cleaning, and supporting fleets of autonomous vehicles in the field. The driver becomes the operator of the fleet, not the operator of the steering wheel.

For cities:

Benefits could include fewer human-error crashes, more consistent driving behavior, and potentially more efficient road use.

But cities will also have to solve new questions:

- How do insurance and liability work with no human at the wheel?

- What new regulations govern AI-driven vehicles at scale?

- How should infrastructure and communication systems evolve to enable Level 4 fleets to move efficiently?

This is why the safety/certification layer (Halos) is not cosmetic. It’s a requirement for public approval.

Why This Partnership Matters in the Tech World

The robotaxi industry has spent years stuck in pilot mode: a few cars in a geofenced downtown, lots of hype, no global scale.

The Uber–NVIDIA partnership is built to break out of that pattern using three levers:

- Scale is defined up front.

Uber and NVIDIA are openly targeting a 100,000-vehicle Level 4-ready fleet, with ramp beginning in 2027, not an indefinite “sometime.”

- The hardware/software stack is standardized.

NVIDIA DRIVE AGX Hyperion 10 and DRIVE AGX Thor give automakers a validated Level 4-ready baseline (compute, sensors, safety OS, autonomy software). This reduces fragmentation and speeds production.

- The data flywheel is massive.

Uber’s real-world ride data feeds NVIDIA’s AI training (DGX Cloud + Cosmos). Updated autonomy models are pushed back into Level 4-ready vehicles via an ecosystem of automakers and autonomy partners. The loop gets stronger with every mile.

Put simply, Uber brings demand and data. NVIDIA brings the AI brain and certified safety stack. Automakers bring the physical fleets. Regulators get an audit path on which to base approval.

If all of that holds, booking an autonomous ride in the Uber app stops being science fiction and starts looking like a 2027+ product roadmap — not just for passengers in cities, but also for autonomous freight on highways.

The future here is not just “self-driving cars exist.”

It’s “self-driving supply exists at scale, on a platform people already use.” It’s a fleet-size ambition.